CSIG 2022 Competition on Invoice Recognition and Analysis


- Introduction

- Schedule

- Final Ranking List

- Award

- Dataset

- Submission

- Evaluation

- Terms and Condition

- Organizers


Introduction

Invoice recognition is an important part of financial office automation. Its goal is to automatically extract predetermined content (such as date and amount) from scanned invoices. Because scanned invoices vary in layout and often contain blurred handwriting and misplaced text, accurately locating, recognizing and structuring the invoice fields is a challenging task.

For this edition we are releasing the Invoice Analysis and Identification Challenge. The competition draws on cutting-edge technologies such as computer vision, natural language processing, and multimodal fusion, and has both technical value and practical application value. We sincerely invite algorithm experts and technology enthusiasts to participate, to design better invoice recognition algorithms, and to further promote the adoption of OCR in real production scenarios.

We use CodaLab as the competition platform. Participants need to register to take part in the competition. Please follow these steps: 1) Sign up on CodaLab. 2) On CodaLab, join the Invoice Analysis and Identification Challenge by clicking the “Participate” tab. Detailed rules are listed in the "Terms and Condition" section.

Schedule

Preliminary Contest:

- 11th April 2022: Registration Open For All Participants

- 18th April 2022: Dataset Open For Download

Notice: the dataset link can be found on CodaLab.

Notice: We recently found that the CodaLab website cannot properly display the competition information. If you have not yet downloaded the training dataset and the testA images, please email us (qiaoliang6@hikvision.com) with your participant team id to obtain the download links. If the CodaLab problem is not fixed by the second round, we will also release the dataset links via email.

- 18th April 2022 to 23rd June 2022: Preliminary Contest A Evaluation

- 24th June 2022 to 26th June 2022: Preliminary Contest B Evaluation

Final Contest:

- 27th June 2022 to 7th July 2022: Winner Scheme Verification (Review the code and anti-cheating situation, and reproduce the results)

- 8th July 2022: Final defense (held online) and announcement of the winning results

Final Ranking List

| Rank | Team name | Organization |
| --- | --- | --- |
| 1 | SECAI | Institute of Information Engineering, Chinese Academy of Sciences |
| 2 | DataGrand | DataGrand Information Technology (Shanghai) Co., Ltd. |
| 3 | Dialga | South China University of Technology |
| 4 | naiveocr | Shanghai Jiao Tong University |
| 5 | bestpay | BestPay |

Award

The final rank will be based on the score of the Final defense (testB). There will be:

- 1 first prize: ¥20,000

- 2 second prizes: ¥10,000

- 2 third prizes: ¥5,000

All the above amounts are pre-tax.

Dataset

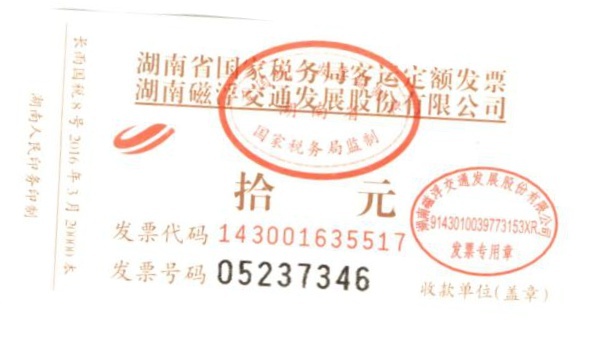

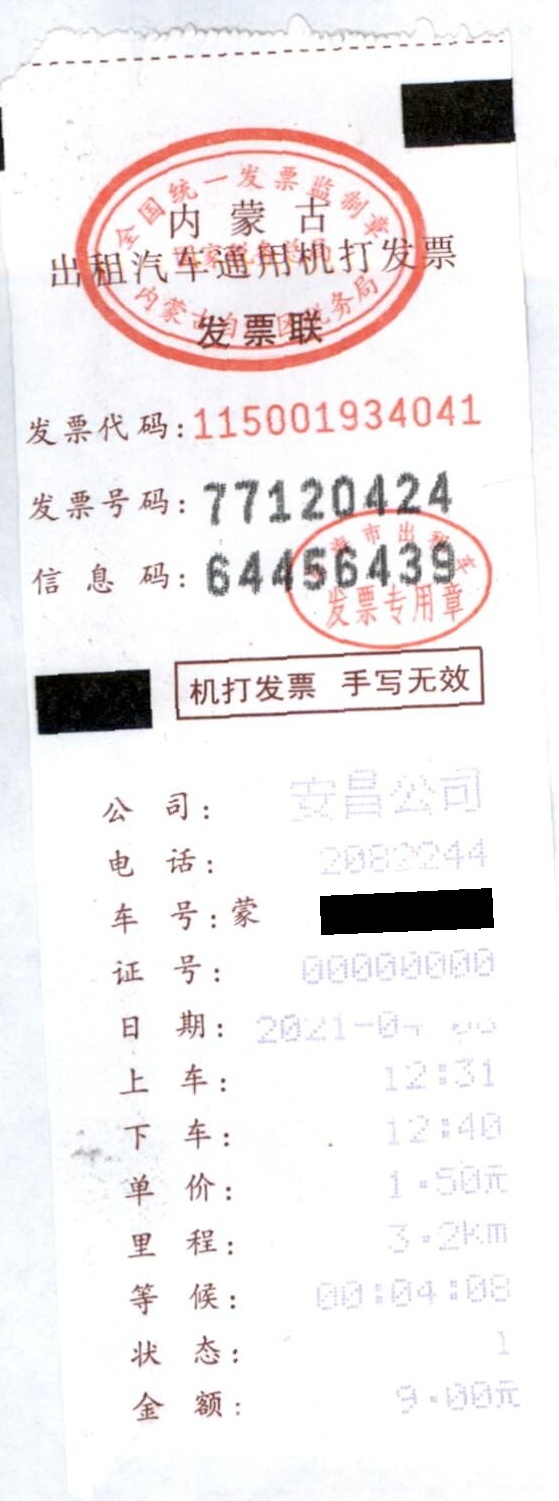

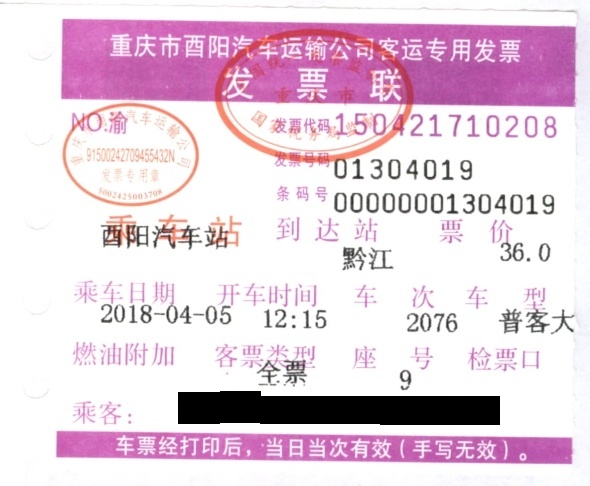

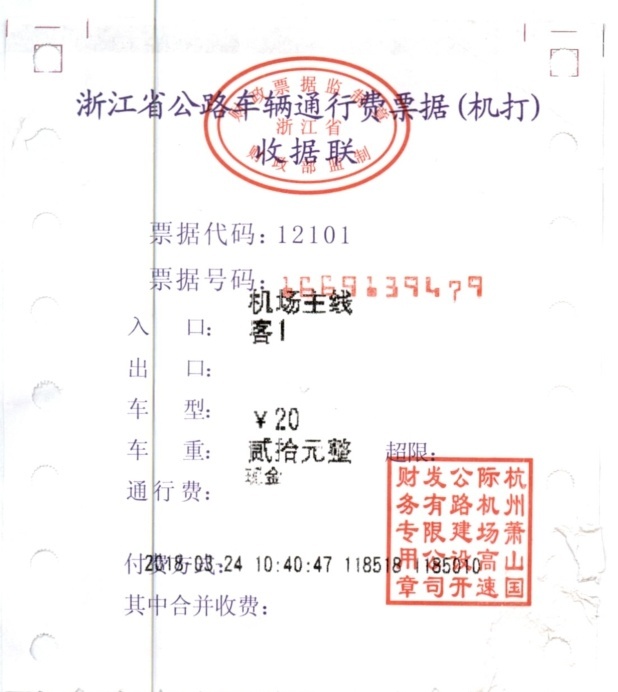

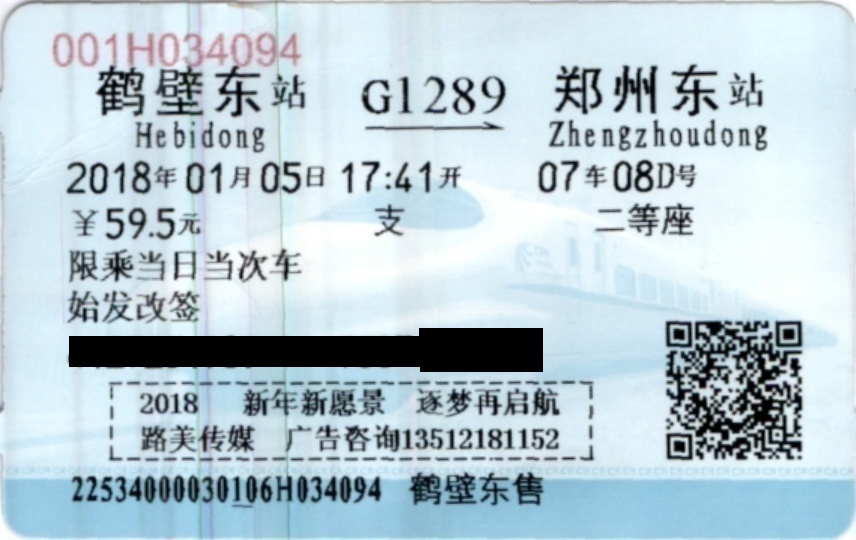

The dataset contains six types of invoices for algorithm verification: Taxi Invoice, Train Invoice, Passenger Invoice, Toll Invoice, Air Itinerary Invoice and Quota Invoice. All images have been desensitized. Some example images are shown in this section.

Air Ticket, General Quota Invoice, Taxi Invoice

Passenger Transport Invoice, Toll Invoice, Train Ticket

Annotations

We provide two types of annotation files for the training data, ocr.json and gt.json:

ocr.json

This file contains the annotations for each text instance's location and content, defined as follows:

    "abf3b61f-cefe-374e-2ace-ac1fbdf3f3af_1.jpg": {
        "height": 891,
        "width": 1245,
        "content_ann": {
            "texts": [
                "112002070106",
                "12921503",
                "壹佰元整",
                "###",
                ...
            ],
            "bboxes": [
                [ 453, 338, 830, 328, 832, 383, 454, 393 ],
                [ 446, 411, 739, 406, 741, 466, 448, 473 ],
                [ 462, 603, 809, 595, 812, 683, 464, 693 ],
                [ 428, 347, 883, 364, 882, 709, 419, 710 ],
                ...
            ]
        },
    },

where

- texts: the text content annotation of each text instance;

- bboxes: the location of each text instance, given as eight integers per instance.
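As a rough illustration of how this structure can be read, the following Python sketch walks over the text instances of an ocr.json-style object. The inline sample reuses values from the example above. Two things are assumptions rather than documented facts: that `texts` and `bboxes` are parallel lists, and that the eight integers of a box are the x, y coordinates of four corner points. The helper name `iter_text_instances` is hypothetical.

```python
import json

# Inline stand-in for a small ocr.json; a real file would be loaded with
# json.load(open(path, encoding="utf-8")).
SAMPLE_OCR = """
{
  "abf3b61f-cefe-374e-2ace-ac1fbdf3f3af_1.jpg": {
    "height": 891,
    "width": 1245,
    "content_ann": {
      "texts": ["112002070106", "12921503"],
      "bboxes": [
        [453, 338, 830, 328, 832, 383, 454, 393],
        [446, 411, 739, 406, 741, 466, 448, 473]
      ]
    }
  }
}
"""


def iter_text_instances(ocr):
    """Yield (file_name, text, corner_points) for every annotated text instance."""
    for file_name, record in ocr.items():
        ann = record["content_ann"]
        # Assumption: texts[i] and bboxes[i] describe the same text instance.
        for text, bbox in zip(ann["texts"], ann["bboxes"]):
            # Assumption: the 8 numbers are x1, y1, x2, y2, x3, y3, x4, y4.
            points = list(zip(bbox[0::2], bbox[1::2]))
            yield file_name, text, points


instances = list(iter_text_instances(json.loads(SAMPLE_OCR)))
for file_name, text, points in instances:
    print(file_name, text, points)
```

Grouping the flat list into (x, y) pairs makes it easier to pass a box to drawing or cropping routines, which usually expect point lists.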

gt.json

This file contains the entity annotation ground truth. An example corresponding to the above ocr.json is as follows:

    "abf3b61f-cefe-374e-2ace-ac1fbdf3f3af_1.jpg": {
        "发票代码": 112002070106,
        "发票号码": 12921503,
        "金额": "壹佰元整",
    }

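One simple way to relate the two annotation files is to look up each gt.json value among the OCR texts of the same image. The sketch below does this by exact string comparison, which is an assumption: in practice an entity may span several text instances or be only part of one. The function name `locate_entities` is hypothetical, and the sample records reuse the values from the examples above.

```python
def locate_entities(ocr_record, gt_record):
    """Map each entity name to the index of the OCR text equal to its value, or None."""
    texts = ocr_record["content_ann"]["texts"]
    located = {}
    for name, value in gt_record.items():
        # The gt.json example stores codes and numbers unquoted, so compare as strings.
        value = str(value)
        located[name] = texts.index(value) if value in texts else None
    return located


ocr_record = {"content_ann": {"texts": ["112002070106", "12921503", "壹佰元整", "###"]}}
gt_record = {"发票代码": 112002070106, "发票号码": 12921503, "金额": "壹佰元整"}

located = locate_entities(ocr_record, gt_record)
print(located)
```

The resulting indices can serve as per-instance labels when training a model that classifies OCR text instances into entity categories.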
Submission

To submit your results to the leaderboard, you must package the submission as a ***.zip file that contains a single JSON file, encoded in UTF-8, with the model's results on the validation or test set.

The keys in the submission json denote the file names and the values are the models' predicted results for evaluation. The required items for different types of invoice are different, as shown below:

Air Ticket

    "file_name.jpg": { "日期": "", "金额": "", "始发站": "", "到达站": "", "保险费": "" }

General Quota Invoice

    "file_name.jpg": { "发票代码": "", "发票号码": "", "金额": "" }

Taxi Invoice

    "file_name.jpg": { "发票代码": "", "发票号码": "", "金额": "", "日期": "" }

Passenger Transport Invoice

    "file_name.jpg": { "日期": "", "始发站": "", "到达站": "", "金额": "" }

Toll Invoice

    "file_name.jpg": { "发票代码": "", "发票号码": "", "金额": "", "入口": "", "出口": "" }

Train Ticket

    "file_name.jpg": { "日期": "", "金额": "", "始发站": "", "到达站": "", "座位类型": "" }

The keys mean: 日期 (date), 金额 (amount), 始发站 (departure station), 到达站 (arrival station), 保险费 (insurance fee), 发票代码 (invoice code), 发票号码 (invoice number), 入口 (entrance), 出口 (exit), 座位类型 (seat type).

A sample submission file can be downloaded here.
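The packaging step can be sketched in Python as follows. This is only an illustration under stated assumptions: the invoice-type labels (`air_ticket`, `quota`, and so on), the helper names, and the inner file name `submission.json` are hypothetical, and the pairing of key sets with invoice types follows the order of the templates and captions above. Values are written as strings, mirroring the empty-string placeholders in the templates.

```python
import io
import json
import zipfile

# Required keys per invoice type; the type labels are hypothetical names.
REQUIRED_KEYS = {
    "air_ticket": ["日期", "金额", "始发站", "到达站", "保险费"],
    "quota": ["发票代码", "发票号码", "金额"],
    "taxi": ["发票代码", "发票号码", "金额", "日期"],
    "passenger": ["日期", "始发站", "到达站", "金额"],
    "toll": ["发票代码", "发票号码", "金额", "入口", "出口"],
    "train": ["日期", "金额", "始发站", "到达站", "座位类型"],
}


def build_entry(invoice_type, predicted):
    """Return a dict with exactly the required keys; missing fields become ""."""
    return {key: str(predicted.get(key, "")) for key in REQUIRED_KEYS[invoice_type]}


def write_submission(results, target, json_name="submission.json"):
    """Write the results as a single UTF-8 JSON file inside a zip archive."""
    # ensure_ascii=False keeps the Chinese keys readable instead of \u escapes.
    payload = json.dumps(results, ensure_ascii=False, indent=2).encode("utf-8")
    with zipfile.ZipFile(target, "w", zipfile.ZIP_DEFLATED) as archive:
        archive.writestr(json_name, payload)


results = {
    "file_name.jpg": build_entry(
        "quota", {"发票代码": "112002070106", "金额": "壹佰元整"}
    ),
}

buffer = io.BytesIO()  # pass a path such as "submission.zip" to write to disk
write_submission(results, buffer)
```

Building every entry through one function keeps the key set consistent for each invoice type, so a field the model failed to extract is submitted as an empty string rather than omitted.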

Evaluation

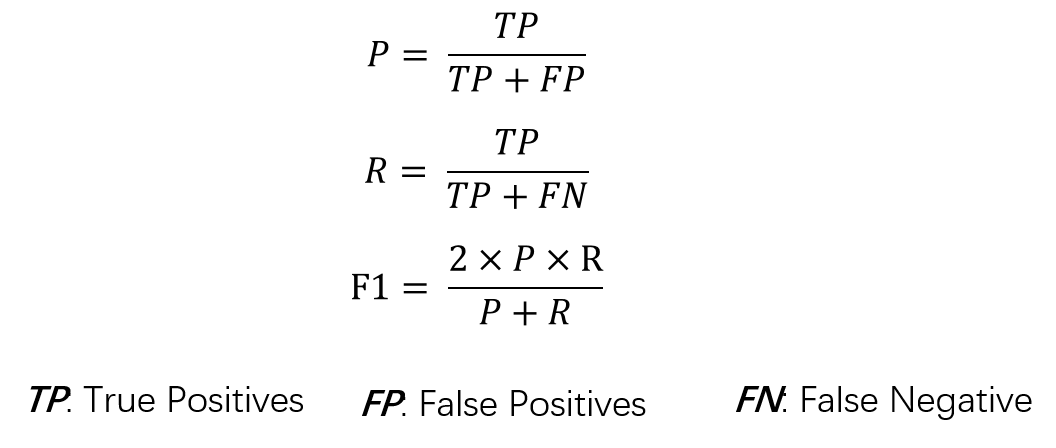

For each test invoice image, the extracted text is compared to the ground truth. An extracted text is marked as correct if both its content and its category match the ground truth; otherwise it is marked as incorrect. Precision is computed over all the extracted texts of all the test invoice images. The F1 score is computed from precision and recall and is used for ranking.
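The description above can be approximated with a short Python sketch. It is not the official scoring script. It assumes exact string matching, treats recall as the share of ground-truth entries that were correctly extracted, ignores empty predicted fields, and uses the standard harmonic-mean definition F1 = 2PR / (P + R). The function name `score` and the sample data are illustrative.

```python
def score(predictions, ground_truth):
    """Return (precision, recall, f1) over (file, category, content) entries."""

    def to_items(table):
        return {
            (file_name, category, str(content))
            for file_name, fields in table.items()
            for category, content in fields.items()
            if str(content) != ""  # assumption: empty fields are not counted
        }

    predicted, expected = to_items(predictions), to_items(ground_truth)
    # An entry is correct only if file, category and content all match.
    correct = len(predicted & expected)
    precision = correct / len(predicted) if predicted else 0.0
    recall = correct / len(expected) if expected else 0.0
    f1 = 2 * precision * recall / (precision + recall) if correct else 0.0
    return precision, recall, f1


gt = {"a.jpg": {"发票代码": 112002070106, "发票号码": 12921503, "金额": "壹佰元整"}}
pred = {"a.jpg": {"发票代码": "112002070106", "发票号码": "12921508", "金额": ""}}

precision, recall, f1 = score(pred, gt)
print(round(precision, 3), round(recall, 3), round(f1, 3))
```

In this sample one of two non-empty predictions is correct and one of three ground-truth entries is recovered, so precision is 1/2 and recall is 1/3.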


Terms and Condition

Team Rules

1. Participants: The competition is open to teams from all over the world, regardless of age and nationality. Members of universities, research institutes and enterprises can register for the competition on the official website.

2. Team registration requirements: All participants must form their teams on their own before the deadline and submit the results of each stage as a team. Each person may join only one team.

3. Submission requirements: Since CodaLab does not support real-name verification, there is no limit on the number of accounts or submissions for the A list. Teams participating in the B-ranking are required to provide team members' information and team accounts to the organizer. Each team may submit results through only one account. During the B-ranking period, a team may submit results at most 10 times. The organizing committee of the competition promises to keep confidential any content involving personal privacy.

4. Avoidance principle: Those who set a competition task, and their departments, may not participate in that task (they may participate in other tasks). Staff who are directly involved in the planning, organization and technical services of the competition may not participate in the competition.

Submission Rules

1. Original works: The entries must be original, must not violate any relevant laws and regulations of the People's Republic of China, and must not infringe any third party's intellectual property rights or other rights. If a violation is discovered or confirmed by the rights holder, the organizing committee will revoke the team's qualification and results and impose serious penalties.

2. Intellectual property rights of the works: The intellectual property rights of the works (including but not limited to algorithms, models, schemes, etc.) shall be shared by the project maker, the participants, and the competition organizer platform. The competition organizer has priority in organizing, investing in, and connecting the works, and in providing product incubation services. The organizer and the competition platform have the right to use the entries, competition information and team information in promotional materials, related publications, designated and authorized media releases, official website browsing and downloading, exhibitions (including traveling exhibitions) and other activities.

3. Description of competition data: The organizing committee authorizes participants to use the provided data for model training in the specified competition. The copyright of the dataset of this competition belongs to Davar-Lab of Hikvision Research Institute. The dataset may only be downloaded through official channels and used in non-commercial scenarios; please do not distribute it through other channels.

4. Compliance of works: All teams shall ensure compliance of their works. If any of the following or other major violations occur, the organizing committee will, after consultation, revoke the team's qualification and results, and the list of winning teams will be extended to the next teams in order. Major violations are as follows:

   a. Using alternate accounts, colluding, plagiarizing the code of others, or other suspected violations and cheating;

   b. Using additional private data (only open-source datasets are allowed as external data);

   c. Submitting incomplete materials or any false information;

   d. Being unable to provide a convincing explanation when doubts are raised about the work;

   e. Submitting work that contains unhealthy, obscene, pornographic or defamatory content about any third party, or other major violations.

Results Verification

In order to ensure the fairness of the competition, participants are required to provide their unique team account information and team personnel information via email as required by the organizer during the opening period of the B-ranking. Unverified accounts will not be included in the final ranking.

After the end of the B-ranking, the top 10 teams are required to participate in the review of their results. During the review, the teams are required to provide source code and documentation to ensure the authenticity and validity of their results.

Teams that pass the review will enter the final defense. The final ranking combines the B-ranking score (70%) with an assessment of algorithm innovation, effectiveness, rationality and other considerations (30%).

If you have any questions, please email qiaoliang6@hikvision.com.


Organizers

Hikvision Research Institute

School of Computer Science and Technology, Fudan University

School of Computer Science and Technology, Hainan University