MANGO: A Mask Attention Guided One-Stage Scene Text Spotter

Abstract

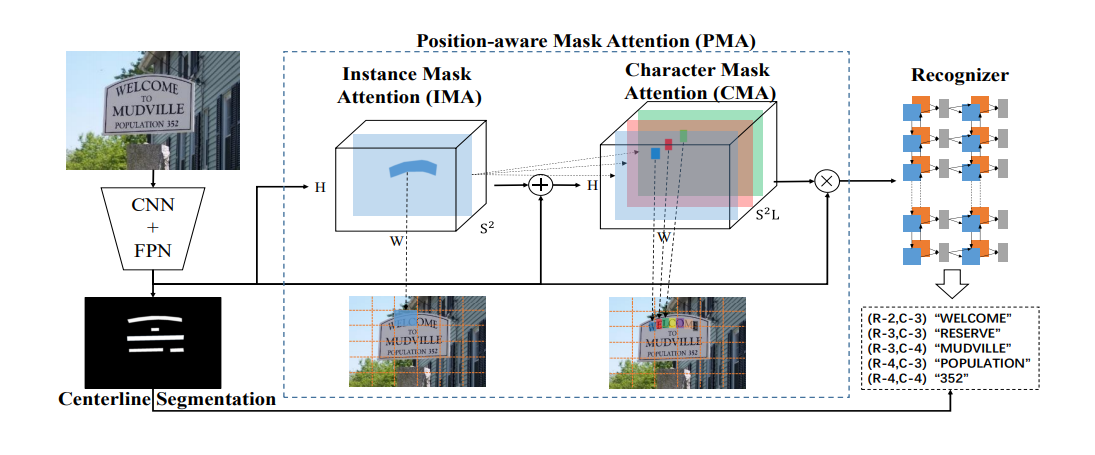

Recently, end-to-end scene text spotting has become a popular research topic due to its advantages of global optimization and high maintainability in real applications. Most methods attempt to develop various region of interest (RoI) operations to concatenate the detection part and the sequence recognition part into a two-stage text spotting framework. However, in such a framework, the recognition part is highly sensitive to the detected results (e.g., the compactness of text contours). To address this problem, we propose a novel Mask AttentioN Guided One-stage text spotting framework named MANGO, in which character sequences can be directly recognized without RoI operations. Concretely, a position-aware mask attention module is developed to generate attention weights on each text instance and its characters. It allows different text instances in an image to be allocated to different feature map channels, which are further grouped as a batch of instance features. Finally, a lightweight sequence decoder is applied to generate the character sequences. It is worth noting that MANGO inherently adapts to arbitrary-shaped text spotting and can be trained end-to-end with only coarse position information (e.g., rectangular bounding boxes) and text annotations. Experimental results show that the proposed method achieves competitive and even new state-of-the-art performance on both regular and irregular text spotting benchmarks, i.e., ICDAR 2013, ICDAR 2015, Total-Text, and SCUT-CTW1500.

[Paper]

Highlights Contributions

❃ We propose a compact and robust one-stage text spotting framework named MANGO that can be trained in an end-to-end manner.

❃ We develop the position-aware mask attention module to generate the text instance features into a batch, and build the one-to-one mapping with final character sequences. The module can be trained with only rough text position information and text annotations.

❃ Extensive experiments show that our method achieves competitive and even state-of-the-art results on both regular and irregular text benchmarks.
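The grouping step named in the second contribution can be illustrated with a small sketch. It assumes one attention map per (text instance slot, character position) pair and pools the shared feature map with each map, which yields a batch with one feature sequence per instance. The function name `group_instance_features`, the tensor layouts, and the weighted-sum pooling are illustrative assumptions, not the authors' implementation.

```python
def group_instance_features(features, attention):
    """Pool a shared feature map into a batch of per-instance sequences.

    Assumed layouts (hypothetical, chosen for illustration):
      features:  H x W x C nested lists (shared feature map)
      attention: K x L x H x W nested lists, one weight map per
                 (instance slot, character position) pair
    Returns K x L x C nested lists.
    """
    height, width = len(features), len(features[0])
    channels = len(features[0][0])
    batch = []
    for instance_maps in attention:        # one slot per text instance
        sequence = []
        for char_map in instance_maps:     # one map per character position
            pooled = [0.0] * channels
            for y in range(height):
                for x in range(width):
                    weight = char_map[y][x]
                    if weight == 0.0:
                        continue           # skip positions with no attention
                    for c in range(channels):
                        pooled[c] += weight * features[y][x][c]
            sequence.append(pooled)
        batch.append(sequence)
    return batch


# Toy input: a 2 x 2 feature map with 2 channels, 2 instances, 2 characters each.
features = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[2.0, 2.0], [3.0, 1.0]],
]
attention = [
    [[[1.0, 0.0], [0.0, 0.0]], [[0.0, 1.0], [0.0, 0.0]]],  # instance 0
    [[[0.0, 0.0], [0.5, 0.5]], [[0.0, 0.0], [0.0, 1.0]]],  # instance 1
]
batch = group_instance_features(features, attention)
```

In this sketch each entry `batch[k]` is the feature sequence for instance slot `k`, so a sequence decoder could process all instances together as an ordinary batch without cropping regions from the image.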

Recommended Citations

If you find our work helpful to your research, please feel free to cite us:

@inproceedings{DBLP:conf/aaai/QiaoCCXNPW21,
  author    = {Liang Qiao and
               Ying Chen and
               Zhanzhan Cheng and
               Yunlu Xu and
               Yi Niu and
               Shiliang Pu and
               Fei Wu},
  title     = {{MANGO:} {A} Mask Attention Guided One-Stage Scene Text Spotter},
  booktitle = {AAAI},
  pages     = {2467--2476},
  year      = {2021},
}