TRIE: End-to-End Text Reading and Information Extraction for Document Understanding

Abstract

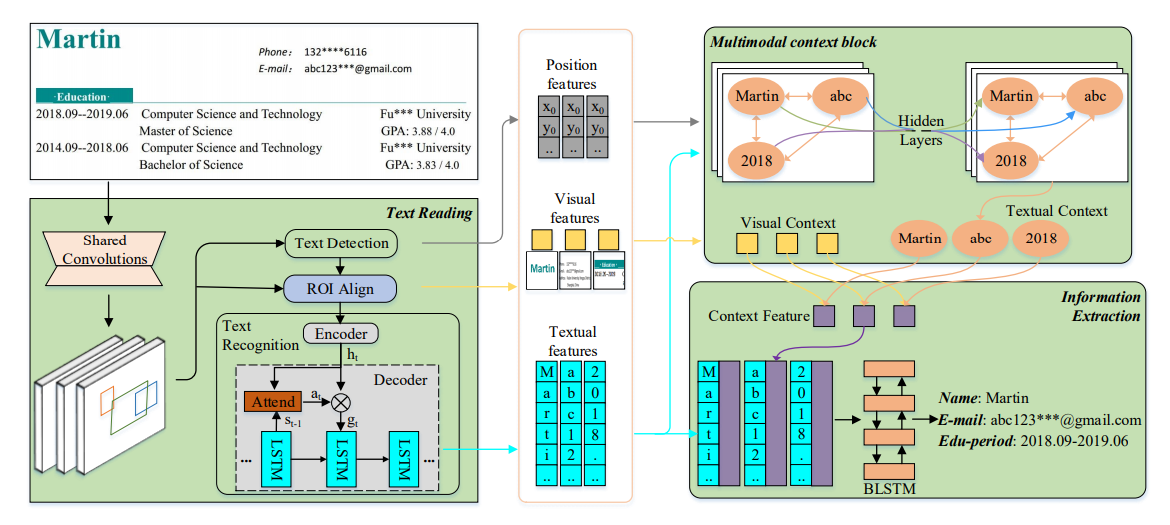

Since ubiquitous real-world documents (e.g., invoices, tickets, resumes and leaflets) contain rich information, automatic document image understanding has become a hot topic. Most existing works decouple the problem into two separate tasks: (1) text reading, which detects and recognizes text in images, and (2) information extraction, which analyzes and extracts key elements from the previously extracted plain text. However, they mainly focus on improving the information extraction task, while neglecting the fact that text reading and information extraction are mutually correlated. In this paper, we propose a unified end-to-end text reading and information extraction network, where the two tasks can reinforce each other. Specifically, the multimodal visual and textual features of text reading are fused for information extraction and, in turn, the semantics in information extraction contribute to the optimization of text reading. On three real-world datasets with diverse document images (from fixed layout to variable layout, from structured text to semi-structured text), our proposed method significantly outperforms the state-of-the-art methods in both efficiency and accuracy.

[Paper]

Highlights Contributions

❃ We propose an end-to-end trainable framework for simultaneous text reading and information extraction in visually rich document (VRD) understanding. The whole framework can be trained end-to-end from scratch, with no need for stage-wise training strategies.

❃ We design a multimodal context block to bridge the OCR and IE modules. To the best of our knowledge, it is the first work to mine the mutual influence of text reading and information extraction.

❃ We perform extensive evaluations of our framework and show superior performance compared with state-of-the-art counterparts, in both efficiency and accuracy, on three real-world benchmarks. Note that these three benchmarks cover diverse types of document images, from fixed to variable layouts and from structured to semi-structured text types.
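As a rough illustration of two ideas named above (fusing the visual and textual features of each text region, and training both tasks under a single objective), the following is a minimal sketch only. It is not the multimodal context block or the loss used in the paper: concatenation as the fusion operator, the function names `fuse_features` and `joint_loss`, the `weight` parameter, and all feature and loss values are assumptions made purely for illustration.

```python
from typing import List

Vector = List[float]


def fuse_features(visual: Vector, textual: Vector) -> Vector:
    """Fuse one text region's features by concatenation (an assumed operator)."""
    return visual + textual


def joint_loss(text_reading_loss: float, extraction_loss: float,
               weight: float = 1.0) -> float:
    """Combine both task losses into one objective so they are optimized together.

    The weighted sum and the `weight` parameter are illustrative assumptions.
    """
    return text_reading_loss + weight * extraction_loss


# Hypothetical features for two detected text regions.
visual_feats = [[0.1, 0.2], [0.3, 0.4]]            # e.g. pooled image features
textual_feats = [[1.0, 0.0, 0.5], [0.0, 1.0, 0.5]]  # e.g. recognized-text embeddings

# One fused vector per region, which an extraction head could then classify.
fused = [fuse_features(v, t) for v, t in zip(visual_feats, textual_feats)]

# Made-up loss values; a single scalar is what end-to-end training would minimize.
total = joint_loss(0.8, 0.4, weight=0.5)
```

Because the extraction loss is part of the same scalar objective, its gradients would also reach the text reading features in a real network, which is the sense in which the two tasks can influence each other.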

Recommended Citations

If you find our work helpful for your research, please feel free to cite us:

@inproceedings{zhang2020trie,
  title={TRIE: End-to-End Text Reading and Information Extraction for Document Understanding},
  author={Zhang, Peng and Xu, Yunlu and Cheng, Zhanzhan and Pu, Shiliang and Lu, Jing and Qiao, Liang and Niu, Yi and Wu, Fei},
  booktitle={Proceedings of the 28th ACM International Conference on Multimedia},
  pages={1413--1422},
  year={2020},
  organization={ACM}
}