Focusing attention: Towards Accurate Text Recognition in Natural Images

Abstract

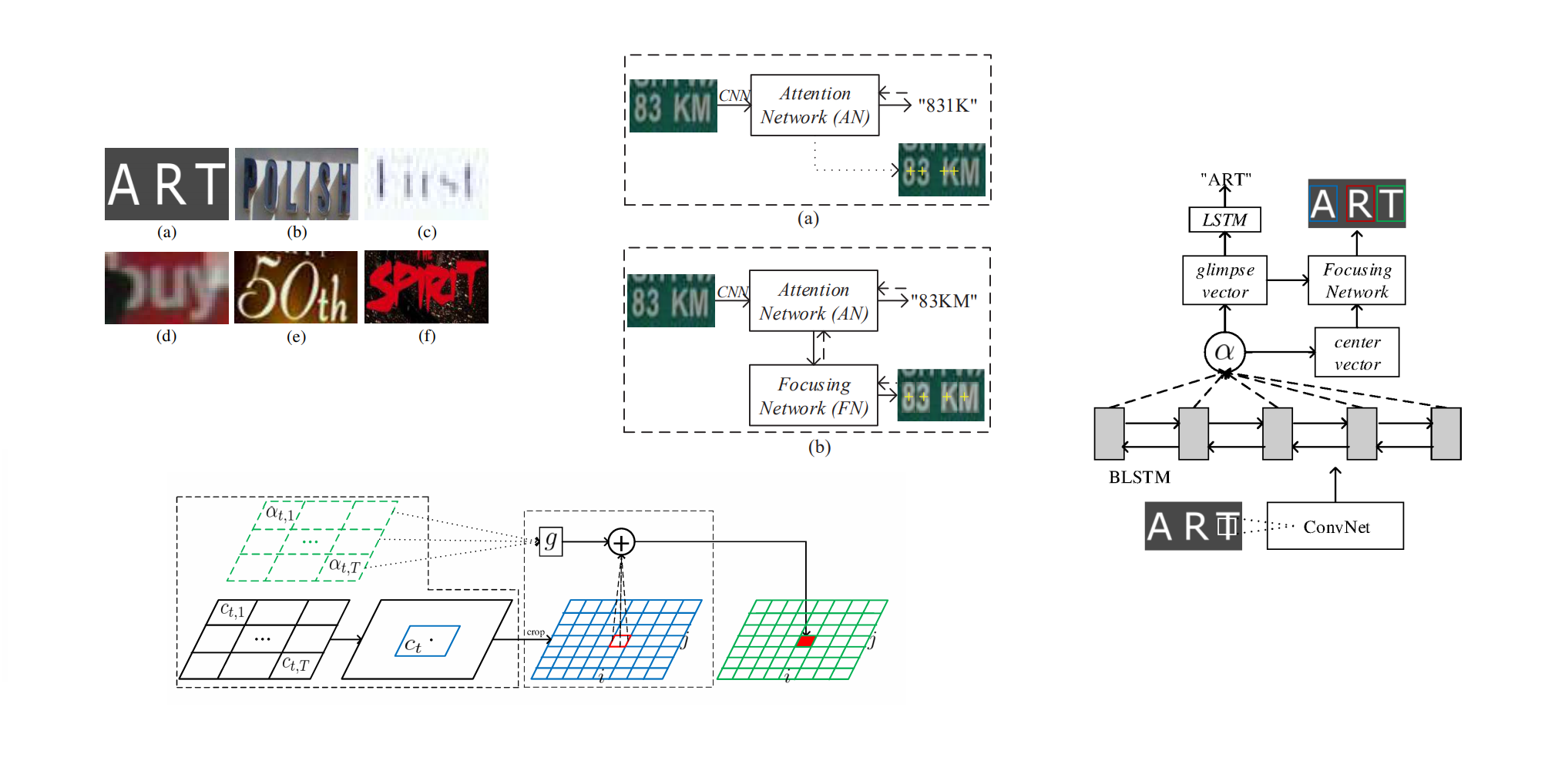

Scene text recognition has been a hot research topic in computer vision due to its various applications. The state of the art is the attention-based encoder-decoder framework that learns the mapping between input images and output sequences in a purely data-driven way. However, we observe that existing attention-based methods perform poorly on complicated and/or low-quality images. One major reason is that existing methods cannot get accurate alignments between feature areas and targets for such images. We call this phenomenon “attention drift”. To tackle this problem, in this paper we propose the FAN (the abbreviation of Focusing Attention Network) method that employs a focusing attention mechanism to automatically draw back the drifted attention. FAN consists of two major components: an attention network (AN) that is responsible for recognizing character targets as in the existing methods, and a focusing network (FN) that is responsible for adjusting attention by evaluating whether AN pays attention properly on the target areas in the images. Furthermore, different from the existing methods, we adopt a ResNet-based network to enrich deep representations of scene text images. Extensive experiments on various benchmarks, including the IIIT5k, SVT and ICDAR datasets, show that the FAN method substantially outperforms the existing methods. [Paper]Highlights Contributions

❃ We propose the concept of attention drift which explains the poor performance of existing attention based methods on complicated and/or low-quality natural images.

❃ We develop a novel method called Focusing Attention Network (FAN) to solve the attention drift problem, where in addition to the AN component existing in most existing methods, a completely new component FN is introduced, which can focus AN’s deviated attention back on the target areas.

❃ We adopt a powerful ResNet-based CNN to enrich deep representations of scene text images.

❃ We conduct extensive experiments on several benchmarks, which demonstrate the performance superiority of our method over the existing methods.

Recommended Citations

If you find our work is helpful to your research, please feel free to cite us:

@inproceedings{cheng2017focusing,

title={Focusing attention: Towards Accurate Text Recognition in Natural Images},

author={Cheng, Zhanzhan and Bai, Fan and Xu, Yunlu and Zheng, Gang and Pu, Shiliang and Zhou, Shuigeng},

booktitle={Proceedings of the IEEE International Conference on Computer Vision},

pages={5076--5084},

year={2017},

}