VSR: A Unified Framework for Document Layout Analysis combining Vision, Semantics and Relations

Abstract

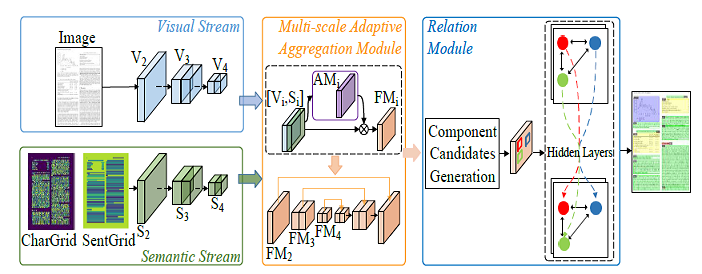

Document layout analysis is crucial for understanding document structures. On this task, vision and semantics of documents, and relations between layout components contribute to the understanding process. Though many works have been proposed to exploit the above information, they show unsatisfactory results. NLP-based methods model layout analysis as a sequence labeling task and show insufficient capabilities in layout modeling. CV-based methods model layout analysis as a detection or segmentation task, but bear limitations of inefficient modality fusion and lack of relation modeling between layout components. To address the above limitations, we propose a unified framework VSR for document layout analysis, combining vision, semantics and relations. VSR supports both NLP-based and CV-based methods. Specifically, we first introduce vision through document image and semantics through text embedding maps. Then, modality-specific visual and semantic features are extracted using a two-stream network, which are adaptively fused to make full use of complementary information. Finally, given component candidates, a relation module based on graph neural network is incorported to model relations between components and output final results. On three popular benchmarks, it outperforms previous models by large margins. [Paper]Highlights Contributions

❃ We propose a unified framework VSR for document layout analysis, combin- ing vision, semantics and relations.

❃ To exploit vision and semantics effectively, we propose a two-stream net- work to extract modality-specific visual and semantic features, and fuse them adaptively through an adaptive aggregation module. Besides, we also explore document semantics at different granularities.

❃ A GNN-based relation module is incorporated to model relations between document components, and it supports relation modeling in both NLP-based and CV-based methods.

❃ We perform extensive evaluations of VSR and on three public benchmarks, VSR shows significant improvements compared to previous models.

Recommended Citations

If you find our work is helpful to your research, please feel free to cite us:

@inproceedings{zhang2021vsr,

title={VSR: A Unified Framework for Document Layout Analysis combining Vision, Semantics and Relations},

author={Zhang, Peng and Li, Can and Qiao, Liang and Cheng, Zhanzhan and Pu, Shiliang and Niu, Yi and Wu, Fei},

booktitle = {ICDAR},

volume = {12821},

pages = {115--130},

year={2021},

}