Text Perceptron: Towards End-to-End Arbitrary-Shaped Text Spotting

Abstract

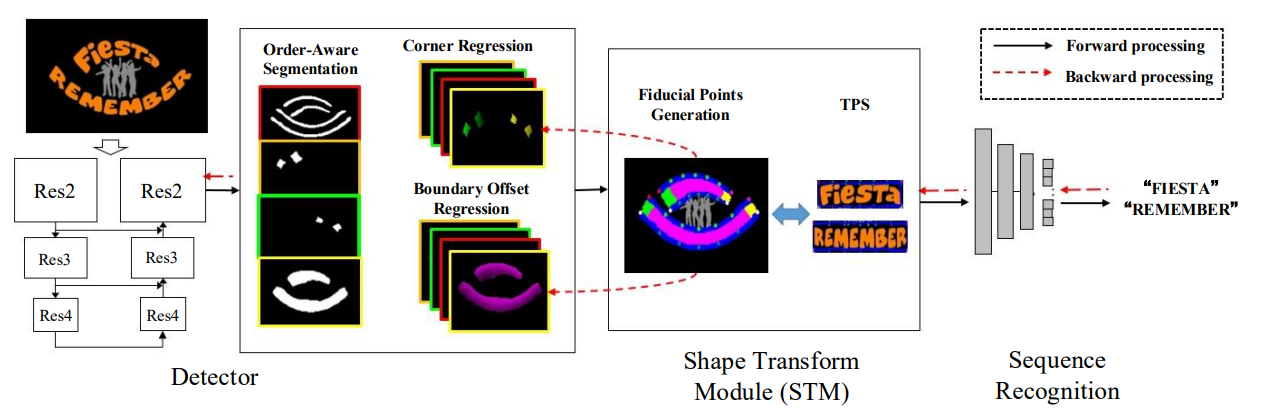

Many approaches have recently been proposed to detect irregular scene text and have achieved promising results. However, their localization results may not suit the subsequent text recognition stage, mainly for two reasons: 1) recognizing arbitrary-shaped text is still a challenging task, and 2) the prevalent non-trainable pipeline strategies between text detection and text recognition lead to suboptimal performance. To handle this incompatibility problem, we propose an end-to-end trainable text spotting approach named Text Perceptron. Concretely, Text Perceptron first employs an efficient segmentation-based text detector that learns the latent text reading order and boundary information. Then a novel Shape Transform Module (STM) transforms the detected feature regions into regular morphologies without extra parameters. It unites text detection and the subsequent recognition part into a single framework and helps the whole network achieve global optimization. Experiments show that our method achieves competitive performance on two standard text benchmarks, ICDAR 2013 and ICDAR 2015, and clearly outperforms existing methods on the irregular text benchmarks SCUT-CTW1500 and Total-Text.

[Paper] [Code] [Supplementary] [Poster] [Video]

Highlighted Contributions

❃ We design an efficient order-aware text detector to extract arbitrary-shaped text.

❃ We develop the differentiable Shape Transform Module (STM), which allows detection and recognition to be optimized jointly in an end-to-end trainable manner.

❃ Extensive experiments show that our method achieves competitive results on two regular text benchmarks and significantly surpasses previous methods on two irregular text benchmarks.
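
To make the idea behind the second contribution more concrete, the following is a minimal sketch of how a curved text region could be mapped onto a regular rectangle without any learnable parameters. It is not the authors' implementation: the function names `build_sampling_grid` and `bilinear_sample` are hypothetical, and the choice of piecewise-linear interpolation between an upper and a lower boundary is an assumption made only for illustration. The sketch uses NumPy and shows the forward pass only; in an autodiff framework the same bilinear sampling would pass gradients to both the features and the boundary points.

```python
import numpy as np


def build_sampling_grid(top, bottom, out_h, out_w):
    """Build an (out_h, out_w, 2) grid of (x, y) source coordinates.

    top, bottom: (K, 2) boundary points in (x, y) order, listed along the
    reading direction. Linear blending is an illustrative assumption.
    """
    top = np.asarray(top, dtype=np.float64)
    bottom = np.asarray(bottom, dtype=np.float64)
    src_t = np.linspace(0.0, 1.0, top.shape[0])   # positions of the K points
    dst_t = np.linspace(0.0, 1.0, out_w)          # one position per output column
    # Piecewise-linear interpolation along each boundary, per coordinate.
    top_xy = np.stack([np.interp(dst_t, src_t, top[:, i]) for i in range(2)], axis=1)
    bot_xy = np.stack([np.interp(dst_t, src_t, bottom[:, i]) for i in range(2)], axis=1)
    # Blend from the upper boundary (v = 0) to the lower boundary (v = 1).
    v = np.linspace(0.0, 1.0, out_h)[:, None, None]
    return (1.0 - v) * top_xy[None] + v * bot_xy[None]


def bilinear_sample(feat, grid):
    """Sample feat (H, W, C) at the (x, y) pixel coordinates in grid (h, w, 2)."""
    h, w = feat.shape[:2]
    x = np.clip(grid[..., 0], 0, w - 1)
    y = np.clip(grid[..., 1], 0, h - 1)
    x0 = np.floor(x).astype(int)
    y0 = np.floor(y).astype(int)
    x1 = np.minimum(x0 + 1, w - 1)
    y1 = np.minimum(y0 + 1, h - 1)
    wx = (x - x0)[..., None]
    wy = (y - y0)[..., None]
    upper = (1 - wx) * feat[y0, x0] + wx * feat[y0, x1]
    lower = (1 - wx) * feat[y1, x0] + wx * feat[y1, x1]
    return (1 - wy) * upper + wy * lower


# Toy feature map whose two channels store the x and y coordinate of each pixel.
ys, xs = np.mgrid[0:20, 0:40]
feat = np.stack([xs, ys], axis=-1).astype(np.float64)

# A curved text region described by four points on each boundary (made-up values).
top = [(2, 6), (12, 3), (24, 3), (34, 6)]
bottom = [(2, 14), (12, 11), (24, 11), (34, 14)]

grid = build_sampling_grid(top, bottom, out_h=8, out_w=32)
rectified = bilinear_sample(feat, grid)
print(rectified.shape)  # (8, 32, 2)
```

Because the sampling grid is computed directly from the boundary points, the transform itself adds no trainable weights; a recognizer would then consume the fixed-size `rectified` features.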

Recommended Citations

If you find our work helpful to your research, please cite it:

@inproceedings{qiao2020text,
  title={Text Perceptron: Towards End-to-End Arbitrary-Shaped Text Spotting},
  author={Qiao, Liang and Tang, Sanli and Cheng, Zhanzhan and Xu, Yunlu and Niu, Yi and Pu, Shiliang and Wu, Fei},
  booktitle={Proceedings of the Thirty-Fourth AAAI Conference on Artificial Intelligence},
  pages={11899--11907},
  year={2020},
}